AI literacy: Navigating regulatory compliance and fostering responsible adoption in banking

The proliferation of artificial intelligence (AI) presents unprecedented opportunities for the banking sector, from streamlining operations and enhancing customer experience to improving risk management and detecting fraud. However, realising these benefits responsibly requires a commitment to AI literacy, both to meet forthcoming regulatory demands and to ensure successful and ethical internal adoption. For financial institutions, a comprehensive approach to AI literacy is not merely a compliance exercise, but a strategic imperative.

Fostering internal awareness and responsible AI adoption

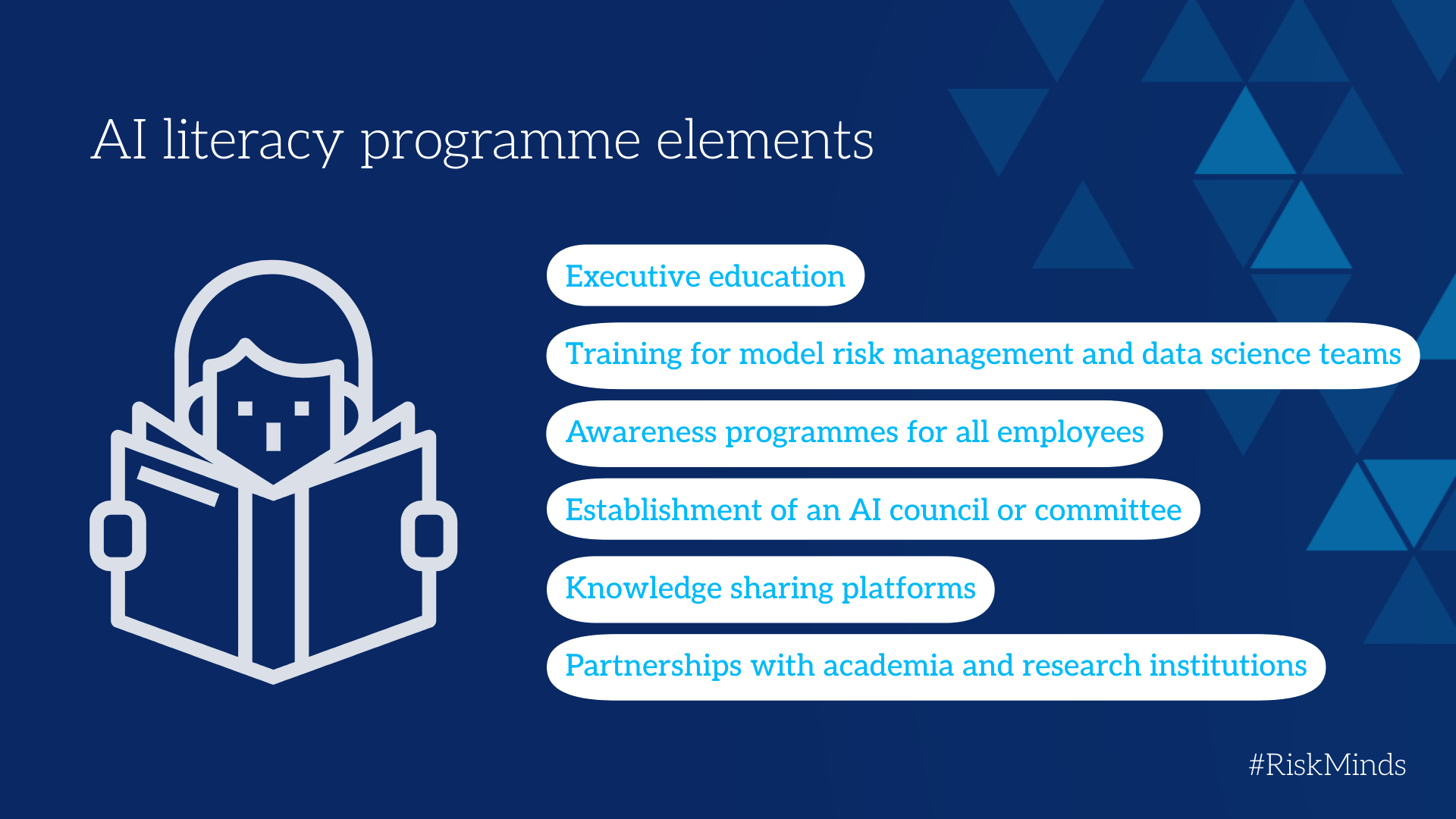

Beyond regulatory compliance, AI literacy is crucial for fostering a culture of responsible AI adoption within the bank. This involves raising awareness among all employees, from senior management to front-line staff, about the opportunities and risks associated with AI. A comprehensive internal AI literacy programme should include the following elements.

Executive education

Senior leaders need to understand the strategic implications of AI, the importance of ethical considerations, and the need for investment in AI talent and infrastructure.

Training for model risk management and data science teams

These teams require in-depth training on AI methodologies, model validation techniques, bias detection and mitigation, and explainable AI (XAI). They should also be encouraged to stay curious and to pursue continuing education in relevant fields.

Awareness programmes for all employees

All employees should receive basic training on AI concepts, its potential impact on their roles, and the bank's ethical guidelines for AI development and deployment. This can be achieved through workshops, online courses, and internal communications campaigns.

Establishment of an AI council or committee

This committee, composed of representatives from various departments, should be responsible for developing and enforcing the bank's AI ethics policies, reviewing AI projects for potential ethical risks, and providing guidance to employees on ethical AI practices.

Knowledge sharing platforms

Create internal forums, wikis, or knowledge repositories where employees can share their AI knowledge, ask questions, and collaborate on AI projects.

Partnerships with academia and research institutions

Collaborating with universities and research institutions can provide access to cutting-edge AI research, expertise, and training programmes.

AI validation literacy for product owners

The internal AI literacy programme could also include AI validation literacy for product owners.

Product owners play a pivotal role in shaping the development and deployment of AI-powered products. They are the voice of the customer, responsible for defining product requirements, prioritising features, and ensuring that the product meets the needs of its users. In the context of AI, product owners need a specific type of AI literacy: AI validation literacy.

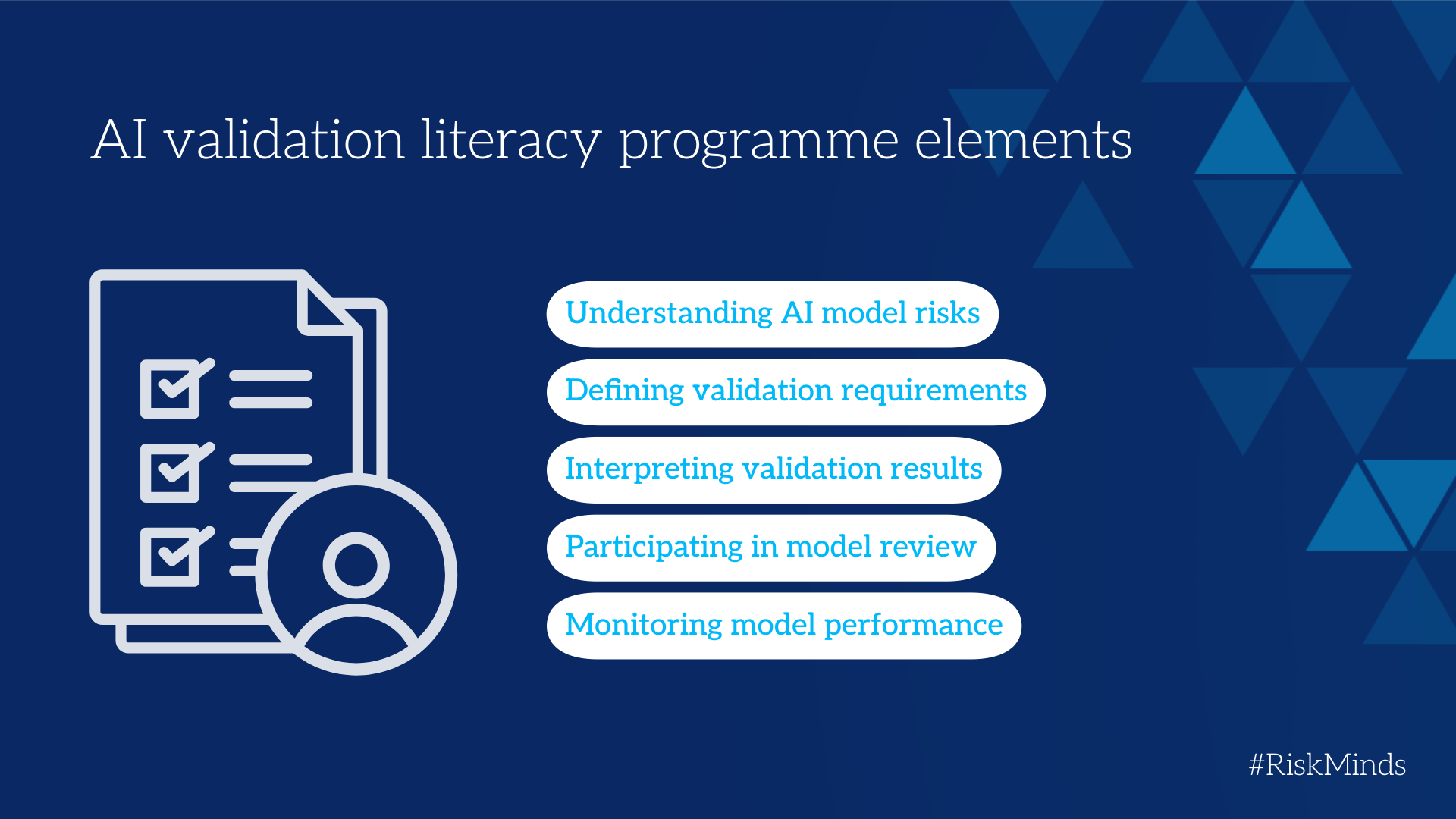

AI validation literacy equips product owners with the knowledge and skills to effectively participate in the validation and monitoring of AI systems. This includes:

Understanding AI model risks

Product owners need to be aware of the potential risks associated with AI models, including bias, fairness issues, lack of explainability, and potential for unintended consequences.

Defining validation requirements

Product owners should be able to define clear validation requirements for AI models, specifying the metrics that will be used to assess model performance, fairness, and robustness. This requires understanding the business context and the potential impact of model errors on users and the bank.

Interpreting validation results

Product owners need to be able to understand and interpret the results of AI model validation. This includes understanding statistical concepts like accuracy, precision, recall, and F1-score, as well as fairness metrics like disparate impact and equal opportunity.
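To make these metrics concrete, the following is a minimal sketch of how they could be computed for a binary classifier. It is illustrative only: the function names, the toy labels, and the group labels `"a"` and `"b"` are all assumptions, and it uses one common definition of each fairness metric (disparate impact as a ratio of selection rates, equal opportunity as a difference in true positive rates). A bank's validation team may use different definitions or libraries.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def ratio(numerator, denominator):
    """Divide safely, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(y_true, y_pred):
    """Return accuracy, precision, recall and F1-score as a dict."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    precision = ratio(tp, tp + fp)  # of predicted positives, share that were right
    recall = ratio(tp, tp + fn)     # of actual positives, share that were found
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
    }


def selection_rate(y_pred, groups, group):
    """Share of a group's members that received a positive prediction."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return ratio(sum(preds), len(preds))


def true_positive_rate(y_true, y_pred, groups, group):
    """Share of a group's actual positives that were predicted positive."""
    hits = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    return ratio(sum(hits), len(hits))


def disparate_impact(y_pred, groups, protected, reference):
    """Ratio of selection rates; values far from 1.0 suggest unequal treatment."""
    return ratio(selection_rate(y_pred, groups, protected),
                 selection_rate(y_pred, groups, reference))


def equal_opportunity_difference(y_true, y_pred, groups, protected, reference):
    """Difference in true positive rates; values far from 0.0 suggest unequal benefit."""
    return (true_positive_rate(y_true, y_pred, groups, protected)
            - true_positive_rate(y_true, y_pred, groups, reference))


# Hypothetical toy data: 1 = positive outcome, groups are illustrative labels.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(classification_metrics(y_true, y_pred))
print(disparate_impact(y_pred, groups, protected="b", reference="a"))
print(equal_opportunity_difference(y_true, y_pred, groups, protected="b", reference="a"))
```

In this toy data the two groups are selected at the same rate, yet their true positive rates differ, which illustrates why a product owner may want to look at more than one fairness metric before accepting a validation result.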

Participating in model review

Product owners should actively participate in model review meetings, providing insights into the business context and ensuring that the model meets the needs of its users. They should also be able to challenge the assumptions and limitations of the model.

Monitoring model performance

Product owners should be involved in the ongoing monitoring of AI model performance, tracking key metrics and identifying potential issues. They should also be able to trigger alerts and escalate issues to the model risk management team when necessary.
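As a rough illustration of what tracking key metrics and triggering alerts might look like in practice, the sketch below compares observed metric values against the levels agreed at validation and lists any breaches for escalation. The `MetricRule` class, the `check_metrics` function, the metric names, and all thresholds are hypothetical; real monitoring would follow the bank's own model risk management standards and tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricRule:
    """A monitored metric with its validated baseline and tolerated drop."""
    name: str
    baseline: float   # value agreed at model validation (assumed)
    max_drop: float   # largest absolute drop tolerated before escalation (assumed)


def check_metrics(rules, observed):
    """Return one alert message per rule that is breached or cannot be checked."""
    alerts = []
    for rule in rules:
        if rule.name not in observed:
            # A missing metric is itself worth escalating.
            alerts.append(f"{rule.name}: no value reported")
            continue
        drop = rule.baseline - observed[rule.name]
        if drop > rule.max_drop:
            alerts.append(
                f"{rule.name}: observed {observed[rule.name]:.2f}, "
                f"baseline {rule.baseline:.2f}, drop {drop:.2f} exceeds {rule.max_drop:.2f}"
            )
    return alerts


# Hypothetical rules and a hypothetical monthly monitoring snapshot.
rules = [
    MetricRule(name="recall", baseline=0.80, max_drop=0.05),
    MetricRule(name="precision", baseline=0.75, max_drop=0.05),
    MetricRule(name="disparate_impact", baseline=0.95, max_drop=0.10),
]
observed = {"recall": 0.72, "precision": 0.74}

for alert in check_metrics(rules, observed):
    print("ESCALATE to model risk management:", alert)
```

Keeping the rules as plain data, separate from the checking logic, is one way to let product owners review and agree the thresholds without needing to read the monitoring code itself.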

In conclusion

For financial institutions, AI literacy is not just a tick-box exercise for regulatory compliance; it is a strategic investment in the future. By prioritising AI literacy across all levels of the organisation, including specialised AI validation literacy for product owners, a bank can ensure that it meets regulatory requirements on the use of AI, mitigates the associated risks, and unlocks the full potential of AI to drive innovation, improve efficiency, and deliver better outcomes for its customers and stakeholders. A well-designed and well-executed AI literacy programme will empower employees to embrace AI responsibly, contribute to a culture of ethical AI development, and ultimately strengthen the bank's competitive advantage in the rapidly evolving landscape of financial services. The model risk management team can play a vital role in championing this initiative and ensuring its success across the organisation.