Data management to navigate regulatory complexity

At the centre of financial services risk management and regulatory compliance is the convergence of data from a huge range of information silos, departments, product lines, customers and risk categories. Risk and finance are at the end of an extensive chain, examining the consolidated threads, uncovering issues and spotting patterns.

Because new regulation such as FRTB forces the issue, many organisations run into difficulty, unable to resolve the enormous data integration task. Without good data management, argues Martijn Groot, VP Product Management, Asset Control, obtaining the right information to provide to regulators is like trawling cloudy waters; there will always be uncertainty as to the resulting catch and its quality.

Harmonies across regulatory data requirements

The banking sector remains in the middle of reform, with investment in risk and compliance continuing to rise. The volume of regulatory alerts has risen sharply in recent years – in 2008, there were 8,704 regulatory alerts; by 2015, that figure had already jumped to over 43,000, and there is no sign of abatement. This equates to a piece of news on new regulation, a standards update, a QIS, a policy document or a consultation every 12 minutes.

The biggest growth in jobs is among risk professionals hired to deal with successive waves of regulatory change. There are common regulatory themes: reconciling different product taxonomies and client classifications, unambiguous identification, additional data context, proving that risk factors and valuation prices are grounded in market reality and, generally, requirements on audit and lineage. In addition to regulators examining the quality of risk information chains for BCBS 239, and stress testing programmes encouraging a reconsideration of processes across silos, failure to meet the requirements of FRTB will subject banks to increased capital charges.

The sourcing and integrating of data

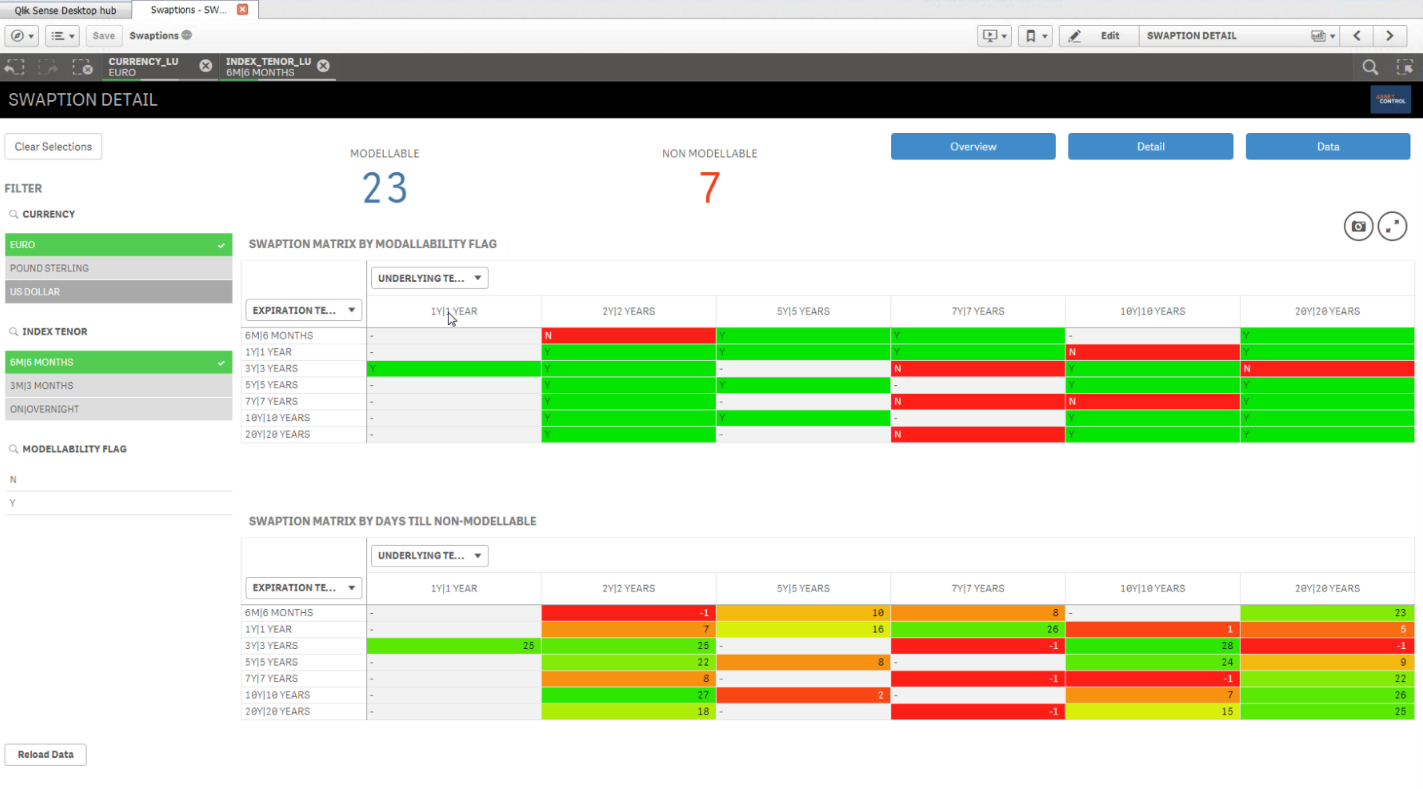

The arrival of a zero-tolerance regulatory approach towards poor data management means that banks need to source and integrate market data efficiently, derive and track risk factor histories, and manage data quality proactively. Equally important is managing the consumption and standardisation of increasing volumes and diversity of source data, and controlling the processing and distribution of consistent market data, including, for example, heatmaps that show the modellability of risk factors and alert users to looming non-modellability.
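As a rough illustration of how a modellability heatmap of this kind might be fed, the sketch below counts real-price observations per risk factor over a trailing twelve months and assigns each factor a status. The thresholds, the function names, the status labels and the sample risk factors are all illustrative assumptions; they are not taken from this article and are not a complete statement of the FRTB eligibility test.

```python
from datetime import date, timedelta

# Illustrative assumptions only, not the full regulatory test.
MIN_OBSERVATIONS = 24   # assumed minimum number of real-price observations
WARNING_BUFFER = 5      # assumed margin used to flag factors close to the limit
WINDOW_DAYS = 365       # trailing observation window


def modellability_status(observation_dates, as_of):
    """Classify one risk factor from the dates of its real-price observations."""
    window_start = as_of - timedelta(days=WINDOW_DAYS)
    count = sum(1 for d in observation_dates if window_start < d <= as_of)
    if count < MIN_OBSERVATIONS:
        return "non-modellable"
    if count < MIN_OBSERVATIONS + WARNING_BUFFER:
        return "at risk"
    return "modellable"


def heatmap_rows(observations_by_factor, as_of):
    """Return a status per risk factor, ready to be coloured in a heatmap."""
    return {
        factor: modellability_status(dates, as_of)
        for factor, dates in observations_by_factor.items()
    }


# Hypothetical risk factors with weekly, fortnightly and monthly observations.
as_of = date(2017, 1, 1)
sample = {
    "EUR swap 10Y": [as_of - timedelta(days=7 * i) for i in range(52)],
    "Corp spread BBB 5Y": [as_of - timedelta(days=14 * i) for i in range(26)],
    "Exotic vol surface": [as_of - timedelta(days=30 * i) for i in range(12)],
}
statuses = heatmap_rows(sample, as_of)
for factor, status in statuses.items():
    print(f"{factor}: {status}")
```

The "at risk" band is the part that supports early warning: a factor that still passes the count but sits within the assumed buffer can be surfaced to users before it drops below the threshold.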

The importance of a consistent data model to anchor the processes and business rules should not be underestimated either. Sourcing clean market data continues to be a crucial challenge for risk departments, and complications with data quality and access can waste valuable quant time.

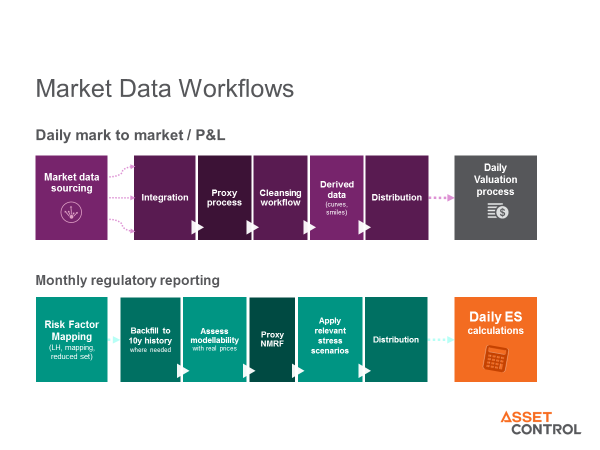

Examples of increasingly complex market data management workflows

Regulators demand good data management and provenance to trace where a result comes from, and therefore a more structural approach to market data sourcing and quality management is vital.

Developing a common data understanding

There may be many complications around regulatory change management, but there is also a range of tools available to assist, from cross-referencing and mapping to developing smart sourcing practices. Internal agreement on terminology can be challenging enough: a lack of standardised acronyms, or of consistent usage between desks, is often the source of data inconsistency. Semantics are critical to creating a single version of the truth, and data governance has become an industry of its own.

Risk and finance are the largest stakeholders of sound data governance and data quality. Positioned at the information convergence point, they must ensure each strand is accurate in itself and allocated correctly. As such, they must lead the way when it comes to good data practices.

A bank’s ability to live up to the expectations of the risk data aggregation and reporting principles within BCBS 239 plays a pivotal role, through the provision of transparency and integration capabilities. The specific reports, metrics and regulatory destinations may differ, but much of the input is the same. Good infrastructure establishes common ground for regulatory mandates (whether BCBS 239, FRTB or MiFID II), and as a result, it becomes clear what external data is required as a net sum of regulation.

Data infrastructure as an enabler

As organisations scramble to adjust their practices to comply with regulatory requirements, an overriding theme is emerging: data infrastructure. Creating robust data infrastructure is the best place to start when preparing for regulation. A solid foundation for compliance with the new market risk framework of FRTB, inclusive of prices, traded prices, quotes, risk factors and quality assessments, is a precondition of effective change management. This is relevant for adjacent regulations such as PRIIPs and MiFID II, but also for the business overall. Easier data access for risk modellers and data scientists, using industry-standard methods, will shorten the model development and onboarding cycle and reduce the corresponding cost of change.

Risk Factor Modellability Assessment prepared by Asset Control shown through QlikSense®

First and foremost, banks should look at implementing best practices in the collection, cross-referencing and integration of data, before moving on to look at data quality workflows, such as controlling and tracking proxies. Joined-up data may be the most significant shared aim of regulatory regimes – but it has yet to be adequately addressed.

A common data model for product terms and conditions, prices and risk factors is important, as are mapping and matching rules to cross-reference sources and taxonomies. At the same time, banks need the ability to configure distributions to risk and valuation systems, monitor and resolve exceptions and track the use of proxies or other actions taken on data. Cloud and NoSQL technologies are important enablers to reduce cost in the data supply chain and to make access to clean market data easier for risk managers and quants.
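To make the cross-referencing, exception handling and proxy tracking described above more concrete, here is a minimal sketch. Everything in it is hypothetical: the vendor names, the made-up identifiers, the `GoldenPrice` record and the `consolidate` function are assumptions chosen for illustration, not a description of any particular product or data model.

```python
from dataclasses import dataclass, field

# Hypothetical mapping rules: (source, source identifier) -> internal identifier.
CROSS_REFERENCE = {
    ("vendor_a", "XS0000000001"): "BOND-001",
    ("vendor_b", "A1B2C3"): "BOND-001",
    ("vendor_a", "XS0000000002"): "BOND-002",
}


@dataclass
class GoldenPrice:
    internal_id: str
    price: float
    source: str
    is_proxy: bool = False
    lineage: list = field(default_factory=list)  # audit trail of how the price arose


def consolidate(quotes, universe, proxies):
    """Map vendor quotes to internal ids, fill gaps with proxies, log exceptions."""
    prices, exceptions = {}, []
    for source, source_id, price in quotes:
        internal_id = CROSS_REFERENCE.get((source, source_id))
        if internal_id is None:
            exceptions.append((source, source_id, "no mapping rule"))
            continue
        # First mapped quote wins here; a real set-up would apply source priorities.
        prices.setdefault(
            internal_id,
            GoldenPrice(internal_id, price, source, lineage=[f"{source}:{source_id}"]),
        )
    for internal_id in universe:
        if internal_id in prices:
            continue
        proxy_id = proxies.get(internal_id)
        if proxy_id in prices:
            base = prices[proxy_id]
            prices[internal_id] = GoldenPrice(
                internal_id, base.price, base.source, is_proxy=True,
                lineage=base.lineage + [f"proxied from {proxy_id}"],
            )
        else:
            exceptions.append(("internal", internal_id, "no price and no usable proxy"))
    return prices, exceptions


quotes = [
    ("vendor_a", "XS0000000001", 101.25),
    ("vendor_b", "A1B2C3", 101.30),
    ("vendor_c", "ZZZ", 99.0),
]
universe = ["BOND-001", "BOND-002", "BOND-003"]
proxies = {"BOND-002": "BOND-001"}
prices, exceptions = consolidate(quotes, universe, proxies)
```

Keeping the proxy flag and lineage on the record itself means that any downstream risk or valuation system receiving the price can see whether it was observed or proxied, and from which source it originated.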

Conclusion

Ultimately, the most effective way to manage regulatory change is through the accurate collection, controlled sourcing, cross-referencing, and integration of data as a foundation. This addresses common regulatory demands around taxonomies, classifications, unambiguous identification, additional data context, links between related elements and general requirements on audit and lineage.

Compliance with financial services regulation cannot be treated as a box-ticking exercise. To avoid regulatory change management taking over other projects, firms need to get their data management capabilities in order first – and taking the risk out of their risk data is a critical step in that process.