Derisking AI by design: How to build risk management into AI development

Artificial intelligence (AI) is poised to redefine how businesses work. Already, it is unleashing the power of data across a range of crucial functions, such as customer service, marketing, training, pricing, security, and operations. To remain competitive, firms in nearly every industry will need to adopt AI and the agile development approaches that enable building it efficiently to keep pace with existing peers and digitally native market entrants. But they must do so while managing the new and varied risks posed by AI and its rapid development.

The reports of AI models gone awry due to the COVID-19 crisis have only served as a reminder that using AI can create significant risks. The reliance of these models on historical data, which the pandemic rendered near useless in some cases by driving sweeping changes in human behaviours, makes them far from perfect.

In a previous article, we described the challenges posed by new uses of data and innovative applications of AI. Since then, we’ve seen rapid change in formal regulation and societal expectations around the use of AI and the personal data that are AI’s essential raw material. This is creating compliance pressures and reputational risk for companies in industries that have not typically experienced such challenges. Even within regulated industries, the pace of change is unprecedented.

In this complex and fast-moving environment, traditional approaches to risk management may not be the answer. Risk management cannot be an afterthought or addressed only by model-validation functions such as those that currently exist in financial services. Companies need to build risk management directly into their AI initiatives, so that oversight is constant and concurrent with internal development and external provisioning of AI across the enterprise. We call this approach “derisking AI by design”.

Why managing AI risks presents new challenges

While all companies deal with many kinds of risks, managing risks associated with AI can be particularly challenging, due to a confluence of three factors.

AI poses unfamiliar risks and creates new responsibilities

Over the past two years, AI has increasingly affected a wide range of risk types, including model, compliance, operational, legal, reputational, and regulatory risks. Many of these risks are new and unfamiliar in industries without a history of widespread analytics use and established model management. And even in industries that have a history of managing these risks, AI makes the risks manifest in new and challenging ways. For example, banks have long worried about bias among individual employees when providing consumer advice. But when employees are delivering advice based on AI recommendations, the risk is not that one piece of individual advice is biased but that, if the AI recommendations are biased, the institution is actually systematising bias into the decision-making process. How the organisation controls bias is very different in these two cases.

These additional risks also stand to tax risk-management teams that are already being stretched thin. For example, as companies grow more concerned about reputational risk, leaders are asking risk-management teams to govern a broader range of models and tools, supporting anything from marketing and internal business decisions to customer service. In industries with less defined risk governance, leaders will have to grapple with figuring out who should be responsible for identifying and managing AI risks.

AI is difficult to track across the enterprise

As AI has become more critical to driving performance and as user-friendly machine-learning software has become increasingly viable, AI use is becoming widespread and, in many institutions, decentralised across the enterprise, making it difficult for risk managers to track. Also, AI solutions are increasingly embedded in vendor-provided software, hardware, and software-enabled services deployed by individual business units, potentially introducing new, unchecked risks. A global product-sales organisation, for example, might choose to take advantage of a new AI feature offered in a monthly update to its vendor-provided customer-relationship-management (CRM) package without realising that it raises new and diverse data-privacy and compliance risks in several of its geographies.

Compounding the challenge is the fact that AI risks cut across traditional control areas—model, legal, data privacy, compliance, and reputational—that are often siloed and not well coordinated.

AI risk management involves many design choices for firms without an established risk-management function

Building capabilities in AI risk management from the ground up has its advantages but also poses challenges. Without a legacy structure to build upon, companies must make numerous design choices without a lot of internal expertise, while trying to build the capability rapidly. What level of model risk management (MRM) investment is appropriate, given the AI risk assessments across the portfolio of AI applications? Should reputational risk management for a global organisation be governed at headquarters or on a national basis? How should AI risk management be combined with the management of other risks, such as data privacy, cybersecurity, and data ethics? These are just a few of the many choices that organisations must make.

Baking risk management into AI development

To tackle these challenges without constraining AI innovation and disrupting the agile ways of working that enable it, we believe companies need to adopt a new approach to risk management: derisking AI by design.

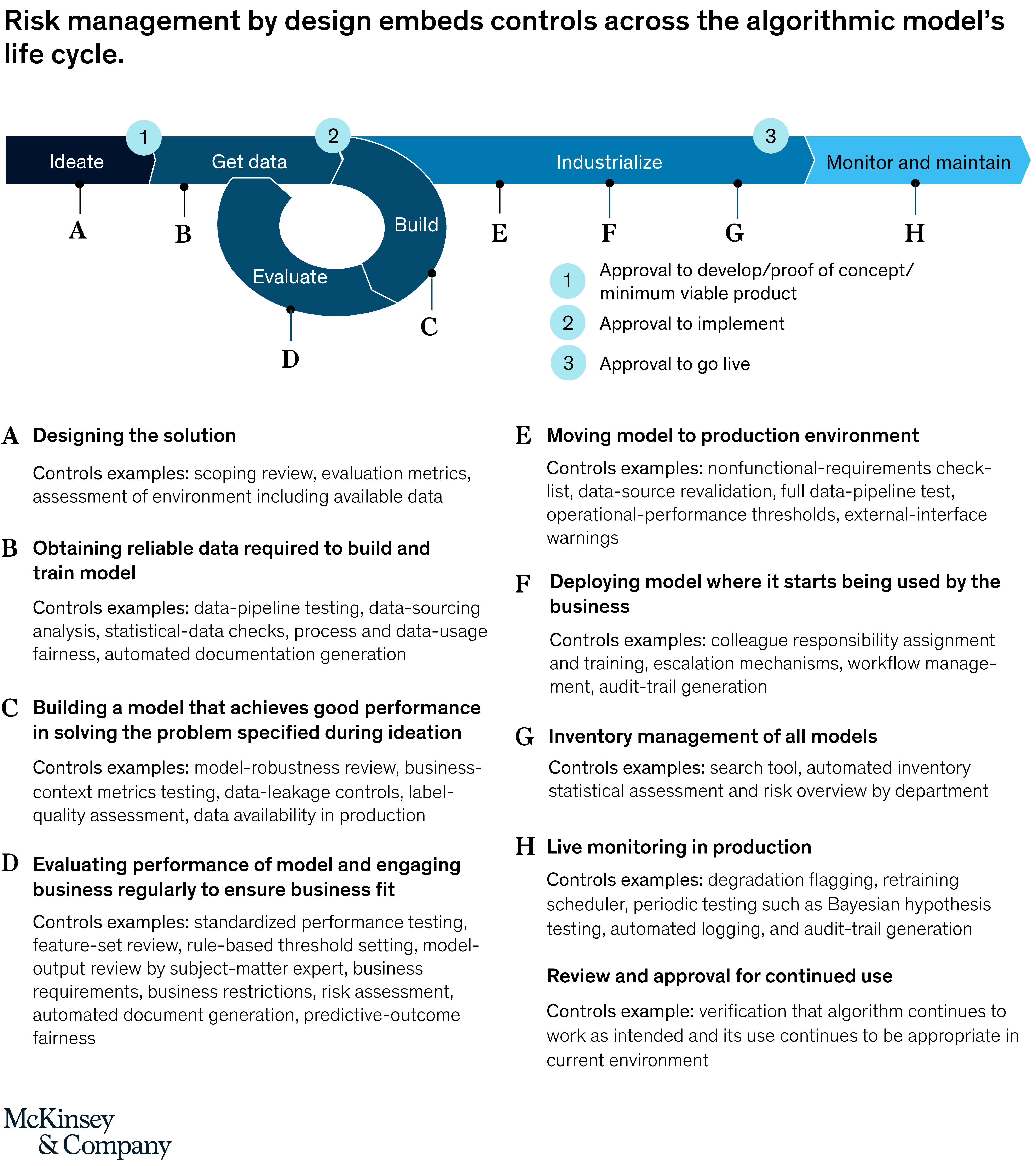

Risk management by design allows developers and their business stakeholders to build AI models that are consistent with the company’s values and risk appetite. Tools such as model interpretability, bias detection, and performance monitoring are built in so that oversight is constant and concurrent with AI development activities and consistent across the enterprise. In this approach, standards, testing, and controls are embedded into various stages of the analytics model’s life cycle, from development to deployment and use.

Typically, controls to manage analytics risk are applied after development is complete. For example, in financial services, model review and validation often begin when the model is ready for implementation. In a best-case scenario, the control function finds no problems, and the deployment is delayed only as long as the time to perform those checks. But in a worst-case scenario, the checks turn up problems that require another full development cycle to resolve. This obviously hurts efficiency and puts the company at a disadvantage relative to nimbler firms.

Similar issues can occur when organisations source AI solutions from vendors. It is critical for control teams to engage with business teams and vendors early in the solution-ideation process, so they understand the potential risks and the controls to mitigate them. Once the solution is in production, it is also important for organisations to understand when updates to the solution are being pushed through the platform and to have automated processes in place for identifying and monitoring changes to the models.
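One way to picture an automated process for identifying changes to vendor-supplied models is to fingerprint the model metadata that was reviewed and compare it with what the platform currently reports. The sketch below is illustrative only: the metadata fields, the model names, and the idea that a vendor exposes such metadata are all assumptions, not something the article specifies.

```python
import hashlib
import json


def fingerprint(metadata):
    """Return a stable SHA-256 digest of a model's metadata dictionary."""
    # Sorting keys makes the digest independent of dictionary ordering.
    canonical = json.dumps(metadata, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def detect_changes(approved_fingerprints, current_metadata):
    """Compare current vendor models against the fingerprints that were reviewed.

    Returns a list of (model_name, status) pairs, where status is "new" for a
    model that was never reviewed and "modified" for one whose metadata changed.
    """
    findings = []
    for name, metadata in sorted(current_metadata.items()):
        if name not in approved_fingerprints:
            findings.append((name, "new"))
        elif fingerprint(metadata) != approved_fingerprints[name]:
            findings.append((name, "modified"))
    return findings


# Hypothetical example: one CRM model was reviewed at version 4.1.
approved = {
    "crm_lead_scoring": fingerprint(
        {"version": "4.1", "features": ["region", "tenure"]}
    ),
}

# After a monthly update, the vendor platform reports these models.
current = {
    "crm_lead_scoring": {"version": "4.2", "features": ["region", "tenure", "age"]},
    "crm_churn_alerts": {"version": "1.0", "features": ["usage"]},
}

findings = detect_changes(approved, current)
for model_name, status in findings:
    print(f"{model_name}: {status}, route to control team for review")
```

A check like this only flags that something changed. Deciding whether the change introduces new privacy or compliance risk still requires review by the control teams.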

It’s possible to reduce costly delays by embedding risk identification and assessment, together with associated control requirements, directly into the development and procurement cycles. This approach also speeds up pre-implementation checks, since the majority of risks have already been accounted for and mitigated. In practice, creating a detailed control framework that sufficiently covers all these different risks is a granular exercise. For example, enhancing our own internal model-validation framework to accommodate AI-related risks results in a matrix of 35 individual control elements covering eight separate dimensions of model governance.

Embedding appropriate controls directly into the development and provisioning routines of business and data-science teams is especially helpful in industries without well-established analytics development teams and risk managers who conduct independent review of analytics or manage associated risk. Companies in these industries can move toward a safe and agile approach to analytics much faster than if they had to create a stand-alone control function to review and validate models and analytics solutions.

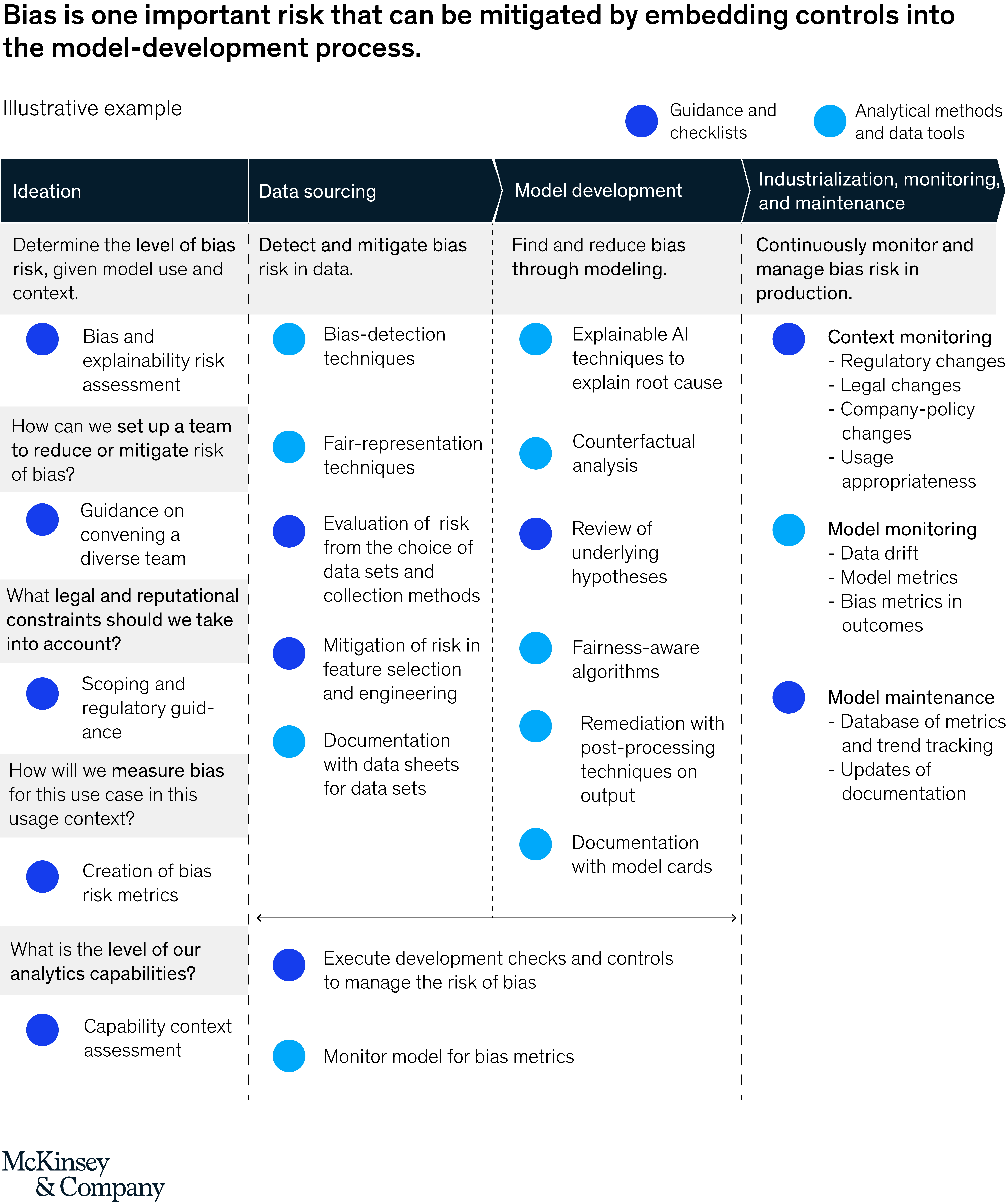

As an example, one of the most relevant risks of AI and machine learning is bias in data and analytics methodologies that might lead to unfair decisions for consumers or employees. To mitigate this category of risk, leading firms are embedding several types of controls into their analytics-development processes:

Ideation

They first work to understand the business use case and its regulatory and reputational context. An AI-driven decision engine for consumer credit, for example, poses a much higher bias risk than an AI-driven chatbot that provides information to the same customers. An early understanding of the risks of the use case will help define the appropriate requirements around the data and methodologies. All the stakeholders ask, “What could go wrong?” and use their answers to create appropriate controls at the design phase.

Data sourcing

An early risk assessment helps define which data sets are “off-limits” (for example, because of personal-privacy considerations) and which bias tests are required. In many instances, the data sets that capture past behaviours from employees and customers will incorporate biases. These biases can become systemic if they are incorporated into the algorithm of an automated process.
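As an illustration of what a bias test on historical data might look like, the sketch below computes a disparate impact ratio: the lowest group approval rate divided by the highest. The article does not name specific tests, so the choice of metric, the field names, the sample data, and the 0.8 threshold (a common rule of thumb, not a universal standard) are all assumptions.

```python
from collections import defaultdict


def selection_rates(records, group_key="group", outcome_key="approved"):
    """Return the share of favourable outcomes for each group."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        if row[outcome_key]:
            favourable[row[group_key]] += 1
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact_ratio(records, group_key="group", outcome_key="approved"):
    """Return the lowest group selection rate divided by the highest."""
    rates = selection_rates(records, group_key, outcome_key)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no favourable outcomes in any group, so no disparity to measure
    return min(rates.values()) / highest


# Hypothetical historical decisions: group A approved 8 of 10, group B 5 of 10.
history = (
    [{"group": "A", "approved": True}] * 8
    + [{"group": "A", "approved": False}] * 2
    + [{"group": "B", "approved": True}] * 5
    + [{"group": "B", "approved": False}] * 5
)

THRESHOLD = 0.8  # assumed rule-of-thumb cut-off; set per use case and jurisdiction

ratio = disparate_impact_ratio(history)
flagged = ratio < THRESHOLD
print(f"disparate impact ratio = {ratio:.3f}, flagged = {flagged}")
```

A flagged data set is not necessarily unusable, but it signals that training on it without mitigation could carry past bias into the automated process.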

Model development

The transparency and interpretability of analytical methods strongly influence bias risk. Leading firms decide which methodologies are appropriate for each use case (for example, some black-box methods will not be allowed in high-risk use cases) and what post hoc explainability techniques can increase the transparency of model decisions.
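A methodology policy of this kind can be encoded so that it is checked at the start of model development rather than at validation. The following is a minimal sketch; the risk tiers, the method names, and the rule that black-box methods always need post hoc explainability are hypothetical choices made for illustration.

```python
# Hypothetical method catalogue; a real one would be agreed with risk and validation teams.
INTERPRETABLE = {"scorecard", "logistic_regression", "decision_tree"}
BLACK_BOX = {"gradient_boosting", "neural_network"}

# Hypothetical policy: black-box methods are barred from high-risk use cases.
POLICY = {
    "high": {"allow_black_box": False},
    "medium": {"allow_black_box": True},
    "low": {"allow_black_box": True},
}


def review_methodology(risk_tier, method):
    """Return whether a method is allowed for a risk tier and what it requires."""
    if risk_tier not in POLICY:
        raise ValueError(f"unknown risk tier: {risk_tier}")
    if method in INTERPRETABLE:
        return {"allowed": True, "post_hoc_explainability_required": False}
    if method in BLACK_BOX:
        allowed = POLICY[risk_tier]["allow_black_box"]
        # Where a black-box method is allowed, require an explainability technique.
        return {"allowed": allowed, "post_hoc_explainability_required": allowed}
    raise ValueError(f"method not in catalogue: {method}")


# Example: a consumer-credit decision engine (high risk) versus an information chatbot (low risk).
credit_decision = review_methodology("high", "neural_network")
chatbot_decision = review_methodology("low", "neural_network")
print(credit_decision)
print(chatbot_decision)
```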

Monitoring and maintenance

Leading firms define the performance-monitoring requirements, including types of tests and frequency. These requirements will depend on the risk of the use case, the frequency with which the model is used, and the frequency with which the model is updated or recalibrated. As more dynamic models become available (for example, reinforcement learning, self-learning), leading firms use technology platforms that can specify and execute monitoring tests automatically.
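One monitoring test that lends itself to automation is a check for drift between the data a model was developed on and the data it now sees in production. The sketch below uses the population stability index (PSI) as an example; the article does not prescribe this metric, and the equal-width binning, the sample data, and the 0.25 alert level (a widely quoted rule of thumb) are assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Compute PSI between a baseline sample and a recent sample.

    Bins are equal-width over the baseline range; recent values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(count / len(values), eps) for count in counts]

    expected_shares = shares(expected)
    actual_shares = shares(actual)
    return sum(
        (a - e) * math.log(a / e) for e, a in zip(expected_shares, actual_shares)
    )


# Hypothetical model input: baseline values and a recent sample shifted upward.
baseline = [i / 100 for i in range(100)]
recent = [value + 0.3 for value in baseline]

ALERT_LEVEL = 0.25  # assumed threshold; tune to the risk of the use case

psi_unchanged = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, recent)
needs_review = psi_shifted > ALERT_LEVEL
print(f"unchanged: {psi_unchanged:.3f}, shifted: {psi_shifted:.3f}, review: {needs_review}")
```

Run on a schedule, a test like this could surface the kind of behavioural shift the pandemic caused before model performance visibly degrades.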

Putting risk managers in a position to succeed—and providing a supporting cast

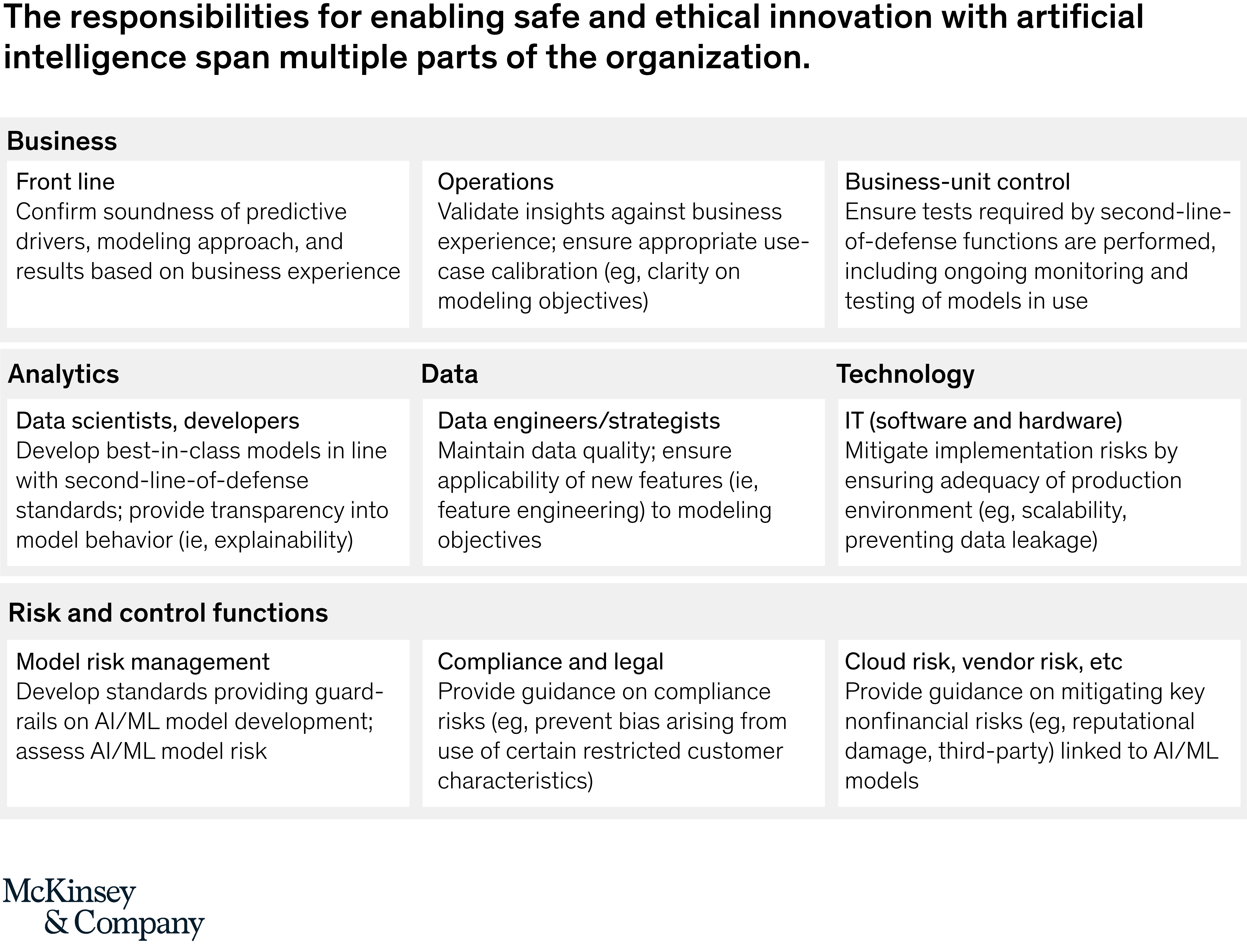

To deploy AI at scale, companies need to tap an array of external and unstructured data sources, connect to a range of new third-party applications, decentralise the development of analytics (although common tooling, standards, and other centralised capabilities help speed the development process), and work in agile teams that rapidly develop and update analytics in production.

These requirements make large-scale and rapid deployment incredibly difficult for traditional risk managers to support. To adjust, they will need to integrate their review and approvals into agile or sprint-based development approaches, relying more on developer testing and input from analytics teams, so they can focus on review rather than taking responsibility for the majority of testing and quality control. Additionally, they will need to reduce one-off “static” exercises and build in the capability to monitor AI on a dynamic, ongoing basis and support iterative development processes.

But monitoring AI risk cannot fall solely on risk managers. Different teams affected by analytics risk need to coordinate oversight to ensure end-to-end coverage without overlap, support agile ways of working, and reduce the time from analytics concept to value.

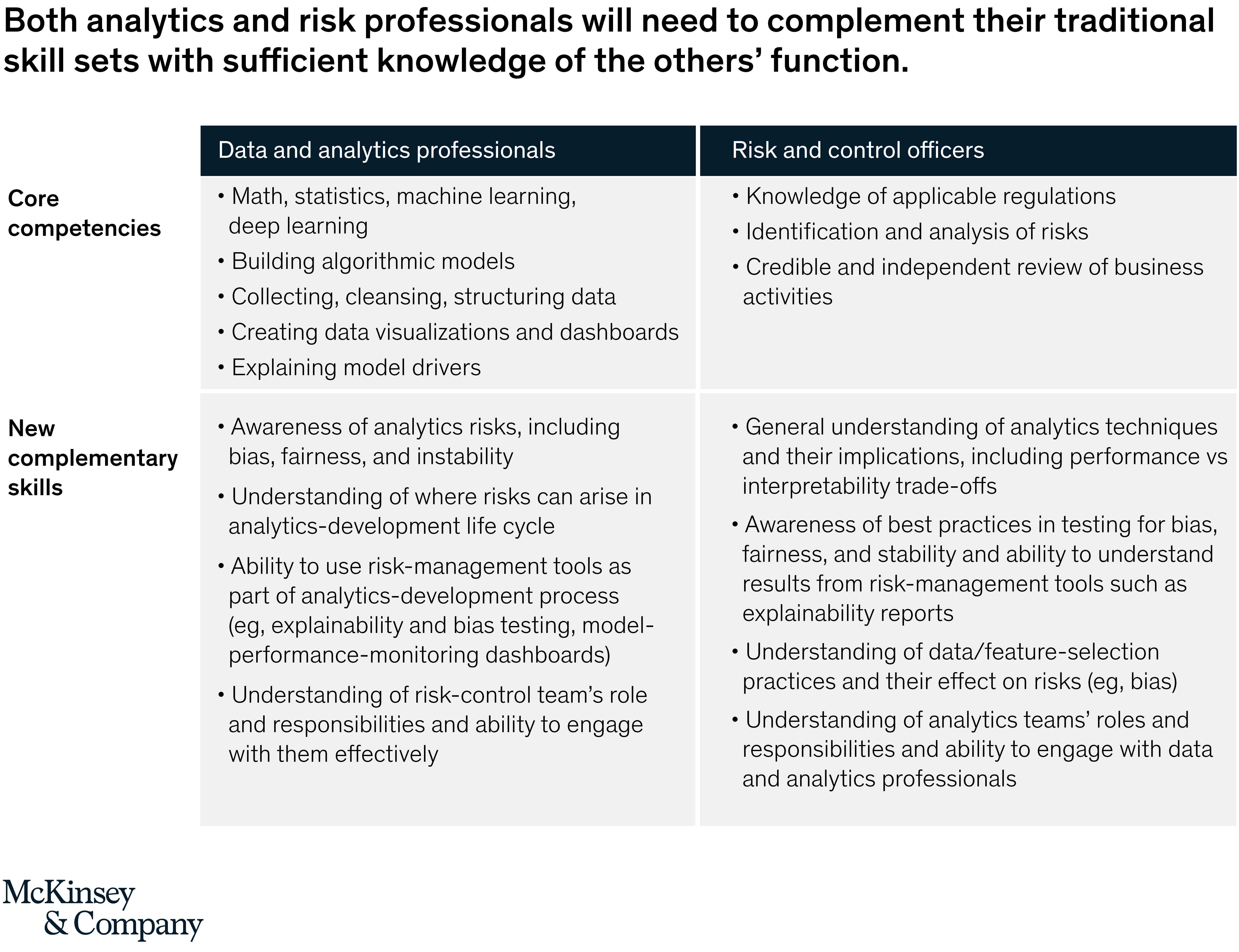

AI risk management requires that each team expand its skills and capabilities, so that skill sets in different functions overlap more than they do in historical siloed approaches. Someone with a core skill—in this case, risk management, compliance, vendor risk—needs enough analytics know-how to engage with the data scientists. Similarly, data scientists need to understand the risks in analytics, so they are aware of these risks as they do their work.

In practice, analytics teams need to manage model risk and understand the impact of these models on business results, even as the teams adapt to an influx of talent from less traditional modelling backgrounds, who may not have a grounding in existing model-management techniques. Meanwhile, risk managers need to build expertise—through either training or hiring—in data concepts, methodologies, and AI and machine-learning risks, to ensure they can coordinate and interact with analytics teams.

This integration and coordination between analytics teams and risk managers across the model life cycle requires a shared technology platform that includes the following elements:

- an agreed-upon documentation standard that satisfies the needs of all stakeholders (including developers, risk, compliance, and validation)

- a single workflow tool to coordinate and document the entire life cycle from initial concept through iterative development stages, releases into production, and ultimately model retirement

- access to the same data, development environment, and technology stack to streamline testing and review

- tools to support automated and frequent (even real-time) AI model monitoring, including, most critically, when in production

- a consistent and comprehensive set of explainability tools to interpret the behaviour of all AI technologies, especially for technologies that are inherently opaque
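To make the workflow element of such a platform concrete, the sketch below models a single record that follows a model from concept to retirement and blocks each stage change until the required functions have signed off. It is a simplified illustration: the stage names, the sign-off requirements per stage, and the class design are assumptions, and a real tool would also handle iterative development cycles.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    CONCEPT = 1
    DEVELOPMENT = 2
    PRODUCTION = 3
    RETIRED = 4


# Hypothetical sign-offs needed before a model may enter each stage.
REQUIRED_SIGN_OFFS = {
    Stage.DEVELOPMENT: {"risk"},
    Stage.PRODUCTION: {"risk", "compliance", "validation"},
    Stage.RETIRED: {"risk"},
}


@dataclass
class ModelRecord:
    name: str
    stage: Stage = Stage.CONCEPT
    sign_offs: dict = field(default_factory=dict)  # target Stage -> set of functions
    history: list = field(default_factory=list)  # (from Stage, to Stage) pairs

    def sign_off(self, target, function):
        """Record that a function (for example, "risk") approves entry to a stage."""
        self.sign_offs.setdefault(target, set()).add(function)

    def advance(self, target):
        """Move to the next stage if every required sign-off has been recorded."""
        if target.value != self.stage.value + 1:
            raise ValueError(f"cannot move from {self.stage.name} to {target.name}")
        missing = REQUIRED_SIGN_OFFS[target] - self.sign_offs.get(target, set())
        if missing:
            raise PermissionError(f"missing sign-offs: {sorted(missing)}")
        self.history.append((self.stage, target))
        self.stage = target


# Example: a model enters development, but production is blocked until all reviews are in.
record = ModelRecord("credit_decision_engine")
record.sign_off(Stage.DEVELOPMENT, "risk")
record.advance(Stage.DEVELOPMENT)

record.sign_off(Stage.PRODUCTION, "risk")
try:
    record.advance(Stage.PRODUCTION)
    blocked = False
except PermissionError as error:
    blocked = True
    print(error)
```

Because every transition is written to the same record, developers, risk, compliance, and validation all see one documented history rather than separate trackers.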

Getting started

The practical challenges of altering an organisation’s ingrained policies and procedures are often formidable. But whether or not an established risk function already exists, leaders can take these basic steps to begin putting into practice derisking AI by design:

- Articulate the company’s ethical principles and vision. Senior executives should create a top-down view of how the company will use data, analytics, and AI. This should include a clear statement of the value these tools bring to the organisation, recognition of the associated risks, and clear guidelines and boundaries that can form the basis for more detailed risk-management requirements further down in the organisation.

- Create the conceptual design. Build on the overarching principles to establish the basic framework for AI risk management. Ensure this covers the full model-development life cycle outlined earlier: ideation, data sourcing, model building and evaluation, industrialisation, and monitoring. Controls should be in place at each stage of the life cycle, so engage early with analytics teams to ensure that the design can be integrated into their existing development approach.

- Establish governance and key roles. Identify key people in analytics teams and related risk-management roles, clarify their roles within the risk-management framework, and define their mandate and responsibilities in relation to AI controls. Provide risk managers with training and guidance that ensure they develop knowledge beyond their previous experience with traditional analytics, so they are equipped to ask new questions about what could go wrong with today’s advanced AI models.

- Adopt an agile engagement model. Bring together analytics teams and risk managers to understand their mutual responsibilities and working practices, allowing them to solve conflicts and determine the most efficient way of interacting fluidly during the course of the development life cycle. Integrate review and approvals into agile or sprint-based development approaches, and push risk managers to rely on input from analytics teams, so they can focus on reviews rather than taking responsibility for the majority of testing and quality control.

- Access transparency tools. Adopt essential tools for gaining explainability and interpretability. Train teams to use these tools to identify the drivers of model results and to understand the outputs they need in order to make use of the results. Analytics teams, risk managers, and partners outside the company should have access to these same tools in order to work together effectively.

- Develop the right capabilities. Build an understanding of AI risks throughout the organisation. Awareness campaigns and basic training can build institutional knowledge of new model types. Teams with regular review responsibilities (risk, legal, and compliance) will need to become adept “translators,” capable of understanding and interpreting analytics use cases and approaches. Critical teams will need to build and hire in-depth technical capabilities to ensure risks are fully understood and appropriately managed.

AI is changing the rules of engagement across industries. The possibilities and promise are exciting, but executive teams are only beginning to grasp the scope of the new risks involved. Existing model risk-management functions may not be ready to support deployment of these new techniques at the scale and pace expected by business leaders. Derisking AI by design will give companies the oversight they need to run AI ethically, legally, and profitably.

About the authors

Juan Aristi Baquero and Roger Burkhardt are partners in McKinsey’s New York office, Arvind Govindarajan is a partner in the Boston office, and Thomas Wallace is a partner in the London office.

The authors wish to thank Rahul Agarwal for his contributions to this article.

This article was originally published on McKinsey.com on August 13, 2020, and is reprinted here by permission.

Copyright © 2020 McKinsey & Company. All rights reserved.