Stop Speeders, Cheaters, and Bots From Putting Your Survey Data Quality at Risk

Successful market researchers know that to tell a powerful, credible story that brings customer insights to life, they must back up their arguments with relevant statistics from a high-quality research study. But ensuring that a survey delivers high-quality data can be difficult, especially when respondents are sourced from broad general-audience panels, aren’t required to have their identities verified (for privacy reasons), or are offered monetary incentives.

Even when data comes from a reputable sample provider and you follow good survey design practices, some respondents will still race through the answers. This jeopardizes data quality and may even invalidate survey findings. These unqualified, inattentive, or even fraudulent respondents must be filtered out; otherwise they add unnecessary noise to the collected data.

This filtering can be accomplished by placing attention checks, also known as trap questions, at strategic points in the survey. In addition to inattentive respondents, trap questions can also catch bots, people who answer dishonestly, and those who don’t even speak the survey’s language.
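The terminate-on-failure logic behind trap questions can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not GroupSolver’s implementation: `TrapQuestion`, `SURVEY`, and `run_survey` are hypothetical names, the “regular study question” entries are placeholders, and the two trap questions are the ones from the election study discussed below. A respondent who fails any trap is terminated on the spot and never sees the rest of the survey.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass(frozen=True)
class TrapQuestion:
    """A multiple-choice attention check with one obviously correct answer."""
    prompt: str
    choices: Tuple[str, ...]
    correct: str


# The two trap questions quoted later in this article.
SPECIES = TrapQuestion(
    "With which species do you identify?",
    ("Rock", "Bunny Rabbit", "Human", "Fish", "Magic Carpet", "Vampire"),
    "Human",
)
WEEKDAY = TrapQuestion(
    "Starting counting with Monday, what is the third day of the week?",
    ("Seven", "Saturday", "Wednesday", "Excellent", "Not Sure"),
    "Wednesday",
)

# Hypothetical survey flow: plain strings are regular study questions,
# and trap questions are interleaved at chosen points.
SURVEY: List[Union[str, TrapQuestion]] = [
    "Q1: regular study question",
    SPECIES,
    "Q2: regular study question",
    WEEKDAY,
]


def run_survey(answers: Dict[str, str]) -> Tuple[bool, Dict[str, str]]:
    """Ask questions in order and stop at the first failed trap question.

    `answers` maps each question prompt to the respondent's choice.
    Returns (completed, study answers recorded up to the point of exit).
    """
    recorded: Dict[str, str] = {}
    for item in SURVEY:
        if isinstance(item, TrapQuestion):
            if answers.get(item.prompt) != item.correct:
                return False, recorded  # terminated: later questions are never asked
            continue  # passed; trap answers are not part of the study data
        recorded[item] = answers.get(item, "")
    return True, recorded
```

For example, `run_survey({SPECIES.prompt: "Vampire"})` returns `(False, {'Q1: regular study question': ''})`: the respondent is stopped at the species question, and the partial answers can then be discarded.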

Benefits of trap questions

Based on data collected from GroupSolver’s own studies, trap questions can identify and filter out the 15–30% of respondents who typically should be disqualified because they are not answering carefully, usually because they are simply not paying enough attention. The questions are not intended to trick respondents or test their knowledge; each should be easy and obvious to answer. Instead, they are inserted at strategic points in the survey to filter out respondents who aren’t taking the survey seriously.

Trap questions should be used in every survey, even when respondents come from very specialized and highly managed communities. Although bad respondents typically come from general population panels, high-cost specialized panels can suffer from inattentive respondents as well. For this reason, we always recommend independently validating respondent quality inside every survey through the use of trap questions.

Trap questions that screen for inattentive respondents can be asked as either open-ended or multiple-choice questions, though multiple-choice questions have traditionally been a little easier to implement. This was because open-ended questions required researchers to manually review, code, and categorize the answers typed into a traditional text box.

However, the latest survey platforms apply natural language processing (NLP) to eliminate this chore. NLP and other tech-enabled techniques can quickly process free-text answers, remove duplicates and noise, and keep only those that are unique and meaningful. Using these techniques with open-ended trap questions makes it easier to flag, in real time, respondents who provide gibberish or zero-value statements, so they can be terminated from the survey before they contribute data of dubious quality.
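The screening of open-ended answers can be approximated with simple rules. The Python sketch below is only a crude stand-in for NLP, not how any particular platform works: `screen_open_ends`, the `LOW_VALUE` phrase list, and the 20% vowel threshold are all illustrative assumptions. It flags empty, low-value, or keyboard-mash answers and keeps one copy of each unique answer after normalizing case, punctuation, and spacing.

```python
import re
from typing import Dict, List, Set, Tuple


def normalize(text: str) -> str:
    """Lowercase, replace punctuation (except apostrophes) with spaces, collapse whitespace."""
    text = re.sub(r"[^\w\s']", " ", text.lower())
    return " ".join(text.split())


# Illustrative "zero-value" replies (an assumption; a real study would tune this).
LOW_VALUE = {normalize(p) for p in ("idk", "I don't know", "nothing", "none", "n/a", "asdf")}


def looks_like_gibberish(text: str) -> bool:
    """Flag empty, low-value, very short, or nearly vowel-free answers."""
    norm = normalize(text)
    if not norm or norm in LOW_VALUE:
        return True
    letters = [c for c in norm if c.isalpha()]
    if len(letters) < 3:
        return True
    vowel_share = sum(c in "aeiou" for c in letters) / len(letters)
    return vowel_share < 0.2  # keyboard mashing such as "sdfghjkl" has no vowels


def screen_open_ends(answers: Dict[str, str]) -> Tuple[Set[str], List[str]]:
    """Return (IDs of respondents to flag, unique meaningful answers)."""
    flagged: Set[str] = set()
    seen: Set[str] = set()
    unique: List[str] = []
    for respondent_id, text in answers.items():
        if looks_like_gibberish(text):
            flagged.add(respondent_id)
            continue
        key = normalize(text)
        if key not in seen:  # drop duplicates that differ only in case or punctuation
            seen.add(key)
            unique.append(text)
    return flagged, unique
```

Running it on `{"r1": "Lower prices", "r2": "lower prices!!", "r3": "sdfghjkl", "r4": "idk"}` flags `r3` and `r4` and keeps the single unique answer `"Lower prices"`. Rules like these are easy to audit but miss fluent, off-topic answers, which is where NLP earns its keep.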

But here, I’d like to focus on multiple-choice trap questions. There are various types that can be used in any survey, and they should be tailored to catch different kinds of problematic respondent behavior.

How to implement trap questions

Ideally, each survey should include more than one trap question. Based on a sample of GroupSolver’s recent studies, the first trap question identifies, on average, nearly 12% of all survey takers as inattentive. A second trap generally catches an additional 7% of the total respondent pool, and a third catches another 5%. These inattentive respondents must be identified as early as possible and terminated from the study, or they will contaminate the results.

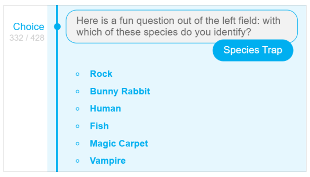
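Because each of these averages is a share of the total respondent pool, the catches add up. The short Python calculation below accumulates the figures quoted above (treating “nearly 12%” as 12%) and, assuming terminated respondents never reach later traps, also expresses each catch as a share of the respondents still in the survey.

```python
from itertools import accumulate

# Average share of ALL survey takers caught by traps 1, 2, and 3 (percent, from the text).
incremental = [12, 7, 5]
cumulative = list(accumulate(incremental))  # [12, 19, 24]

for n, (caught, total) in enumerate(zip(incremental, cumulative), start=1):
    still_in = 100 - (total - caught)  # percent of takers who reached trap n
    print(f"Trap {n}: +{caught}% of all takers "
          f"({caught / still_in:.1%} of those still in), cumulative {total}%")
```

Three traps together flag roughly 24% of respondents, inside the 15–30% range cited earlier, and even the third trap still catches about 6% of the respondents who passed the first two.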

Here’s an illustration of how to use trap questions, drawn from a recent Election 2020 study (N = 332, a general population sample from one of the most prominent panel providers). In this study, three trap questions were included, primarily to filter out inattentive respondents. For example, one asked, “With which species do you identify?” and offered the choices Rock, Bunny Rabbit, Human, Fish, Magic Carpet, and Vampire. Any respondent who was paying attention would choose the obviously correct answer: Human. Of the full sample, 68 respondents answered this question incorrectly and were terminated from the study.

An example of a trap question.

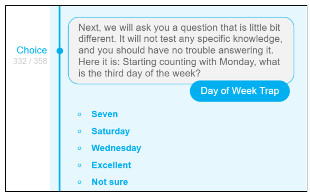

In the same study, respondents were also asked, “Starting counting with Monday, what is the third day of the week?” The choices were Seven, Saturday, Wednesday, Excellent, and Not Sure. The correct choice, Wednesday, was easy to pick only if the respondent took the time to read the question and the options, especially since the options were a random mix of unrelated answers. Eighteen respondents answered the question incorrectly and were therefore disqualified from completing the study.

A day of the week trap question example.

These two trap questions, along with a third one included in the study, terminated a total of 183 respondents, or 35% of the study participants. While this was an unusually high rate of bad respondents, it demonstrates the importance of quality control measures inside the survey.
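The figures in this example can be cross-checked with a quick calculation. Measured against N = 332, 183 terminations would be about 55%, not 35%. If N = 332 instead counts only the respondents who passed all three traps (an assumption, since the study description does not say), then 515 people entered the survey and roughly 35% of them were terminated:

```python
terminated = 183  # respondents removed by the three trap questions (from the text)
completes = 332   # ASSUMPTION: the reported N counts only respondents who passed every trap

entrants = completes + terminated  # people who started the survey under that assumption
rate = terminated / entrants
print(f"{terminated} of {entrants} terminated ({rate:.1%})")
print(f"Share of N = {completes} instead: {terminated / completes:.1%}")
```

Under this reading the termination rate is about 35.5%, consistent with the 35% reported above.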

Maximizing your research study investment and value

Market research takes considerable time, money, and effort to conduct, and low-quality or dishonest survey answers can quickly ruin the integrity of the resulting insights. This, in turn, can tarnish the credibility of your work and lead to poor decision-making for your business.

Mitigating this risk requires that survey responses come from qualified and attentive respondents. While no technique is 100 percent effective, even a quick and simple strategy such as deploying trap questions will improve data quality and increase confidence that the derived insights are valid and actionable.

About the Author

Rasto Ivanic is a co-founder and CEO of GroupSolver® – a market research tech company. GroupSolver has built an intelligent market research platform that helps businesses answer their burning why, how, and what questions. Before GroupSolver, Rasto was a strategy consultant with McKinsey & Company and later he led business development at Mendel Biotechnology. During his career, he helped companies make strategic decisions on developing and managing new businesses, pursuing market opportunities, and building partnerships and collaborations. Rasto is a trained economist with a Ph.D. in Agricultural Economics from Purdue University, where he also received his MBA.