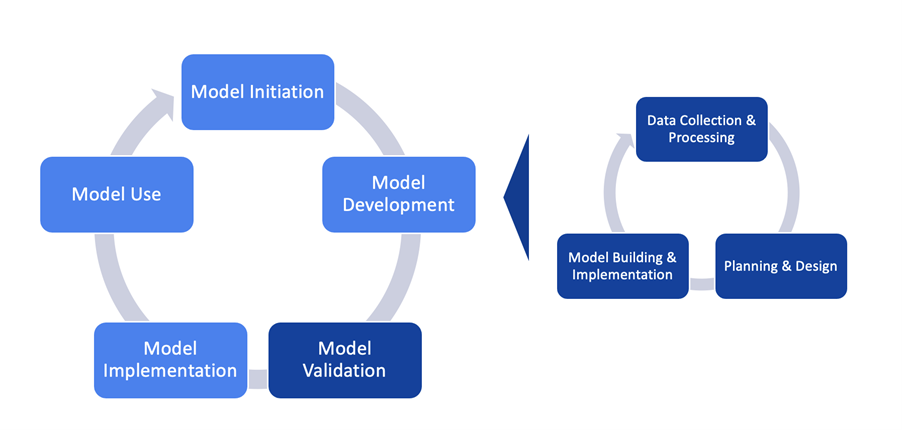

Validation of artificial intelligence (AI) models is one of the most crucial phases of the AI model life-cycle in the financial sector (Figure 1). Although the industry is highly regulated and already familiar with validating traditional statistical methods in credit risk, its existing validation standards need to be extended and adapted for advanced AI algorithms. These extended validation steps are not limited to credit risk; they can also apply to other business domains. Recent discussion papers by the European Banking Authority [1] also highlight the importance of validation and the need for a dedicated framework for AI models.

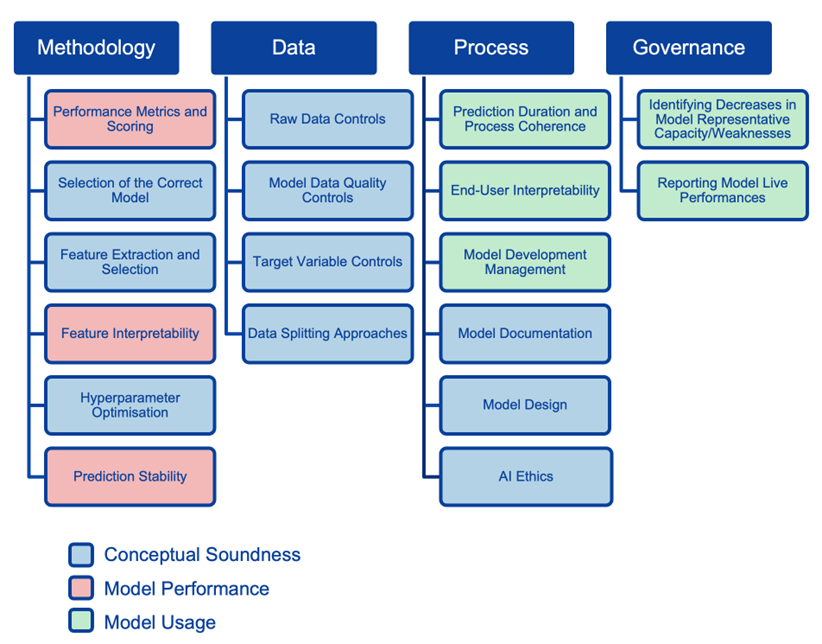

Prometeia’s AI Model Validation Framework addresses the risks of using AI in financial applications and provides significant controls for them. In addition, the framework’s main pillars (Data, Methodology, Process, Governance) are mapped to well-known validation aspects such as conceptual soundness, model performance, and model usage (Figure 2).

Figure 1 - Model life-cycle

Our main motivations behind meeting industry needs are:

Improving adoption of AI by creating “trust”

Adoption of AI in banking and finance applications is increasing but still limited. Raising awareness through standardised model validation can accelerate early adoption. Developing “trust” in AI models is not trivial. Bank of England and OECD reports [3] underline that 44% of models are in the pre-deployment phase and only 56% are in the deployment phase. One way of improving trust is to validate models before they go into production.

Compliance with legislative frameworks & standards

There is now a good opportunity to raise awareness of the need for an internal procedure that ensures AI models are ethical, unbiased and explainable, in line with legislation and guidance such as the EU “AI Act” or the “EBA Discussion Papers on Machine Learning for IRB Models”. This strengthens the case made to the industry and empowers ownership mechanisms.

Improving internal procedures and tasks

The AI Model Validation Framework also allows CDOs and CTOs to review their existing model development procedures. Risk mitigation is handled through a standard validation approach, so that deployment processes are smoother and better aligned.

How to handle the validation complexity of AI techniques?

Our framework has arisen from Prometeia’s long-standing experience in modelling and in applying advanced analytical approaches to different business domains. At Prometeia, we have composed a novel framework that reflects not only quantitative but also qualitative validation considerations. The framework also defines the acceptance criteria and model scoring rules, distinguishing between initial and periodic AI model validations. Therefore, our guideline provides state-of-the-art practices for scoring a model.

Our AI Model Validation Framework stands out by covering the following aspects:

- Qualitative aspects beyond quantitative outcomes: ethical compatibility, robustness, fairness and bias;

- Conditional validation checks tailored to the type of algorithm (for example, its hyper-parameters) and the feature engineering method (time-series features, dimensionality reduction or data augmentation);

- “Human-in-the-loop” strategy;

- Explainability and feature interpretability;

- Monitoring, continuous learning (if any) and governance of the models after production deployment.

We also differentiate our offering with:

- Empowering each stakeholder (model developers, validators, end-users) to coordinate the model life-cycle, with AI model validation as a new part of it;

- Providing quantitative validation libraries (such as overfit/underfit detection, data quality controls, and comparisons of the Population Stability Index (PSI) and performance metrics across train, dev, test and out-of-time (oot) datasets).
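To make the PSI check mentioned in the list above more concrete, here is a minimal sketch of how a Population Stability Index between a reference sample (for example, train) and a comparison sample (for example, oot) could be computed. It is an illustration only and not Prometeia's library: the function name `population_stability_index`, the quantile binning, the default of 10 bins and the `eps` floor for empty bins are all assumptions made for this example.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    n_bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference sample and a comparison sample.

    Hypothetical helper: bin edges are quantiles of the reference sample,
    and eps replaces empty-bin shares so that log() stays defined.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    ref = sorted(expected)
    # Interior cut points taken from the reference sample's quantiles.
    edges = [ref[min(len(ref) - 1, (i * len(ref)) // n_bins)] for i in range(1, n_bins)]

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * n_bins
        for v in values:
            # Bin index = number of cut points that are <= v.
            counts[sum(v >= e for e in edges)] += 1
        return [max(c / len(values), eps) for c in counts]

    p_ref, p_act = shares(expected), shares(actual)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected).
    return sum((a - e) * math.log(a / e) for e, a in zip(p_ref, p_act))


train_scores = [float(x) for x in range(100)]
oot_scores = [x + 50.0 for x in train_scores]  # deliberately shifted sample

psi_same = population_stability_index(train_scores, train_scores)
psi_shifted = population_stability_index(train_scores, oot_scores)
```

Identical samples give a PSI of zero, while the shifted sample gives a clearly positive value. Practitioners often read values above roughly 0.1 as a moderate shift and above 0.25 as a significant one, but these are rules of thumb rather than thresholds defined by the framework described here.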

Our AI Model Validation Framework validates models at every step of the machine learning (ML) / AI model life-cycle, from data creation to deployment.

Under conceptual soundness, the quality of model design, construction and documentation is assessed. For methodology suitability, we focus specifically on ML-only steps: selection of the correct model, feature extraction/selection and hyper-parameter optimisation. Our framework emphasises specific considerations, like privacy, bias, quality and ethical (compliant with EU AI Act) controls.

The model performance phase involves comparing model outputs against expected outcomes. For every algorithm, we check that the correct metrics and scoring are chosen to compare results, assess feature interpretability and explainability, and apply bias and variance concepts to detect overfitted or underfitted models.
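As a rough illustration of the overfit/underfit check described above, the sketch below compares one performance metric across datasets: a low training score suggests high bias (underfitting), while a large drop from train to any other dataset suggests high variance (overfitting). The function name `diagnose_fit` and the thresholds `min_score` and `max_gap` are hypothetical values chosen for this example; they are not acceptance criteria taken from the framework.

```python
from typing import Dict


def diagnose_fit(
    scores: Dict[str, float],
    min_score: float = 0.70,  # assumed floor for an acceptable training score
    max_gap: float = 0.05,    # assumed tolerated drop from train to other sets
) -> str:
    """Label a model from per-dataset scores where higher is better (e.g. AUC)."""
    if "train" not in scores or len(scores) < 2:
        raise ValueError("need a 'train' score and at least one other dataset")

    train = scores["train"]
    # Worst score among the non-training datasets (dev, test, oot, ...).
    worst_other = min(v for k, v in scores.items() if k != "train")

    if train < min_score:
        return "underfit"   # high bias: poor even on the data it was fitted to
    if train - worst_other > max_gap:
        return "overfit"    # high variance: performance does not generalise
    return "acceptable"


overfit_label = diagnose_fit({"train": 0.95, "dev": 0.93, "test": 0.80, "oot": 0.78})
underfit_label = diagnose_fit({"train": 0.62, "dev": 0.61, "test": 0.60, "oot": 0.60})
ok_label = diagnose_fit({"train": 0.85, "dev": 0.84, "test": 0.83, "oot": 0.82})
```

In practice the thresholds would depend on the metric, the use case and the validator's acceptance criteria, so they are better treated as configurable inputs than as fixed constants.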

The model usage phase focuses on the design of digitising processes with ML models, and the "human-in-the-loop" strategy for end-users. Precautions such as model governance, monitoring and reporting mechanisms, and continuous-learning techniques are also examined.

Figure 2 - Mapping of AI model validation concepts

Final remarks

We have been working on embedding our AI Model Validation Framework within our model risk management platform, which provides end-to-end management of the model life-cycle.

By integrating our AI model validation solution within this platform, we can offer clients a more seamless and efficient validation process, reducing the time and effort required to validate their AI models and improving their overall model governance.

You are all invited to the presentation of our Model Validation Framework at RiskMinds 2023 in London (November 15, 15.30). You can find out more and book your spot here.

References:

- [1] European Banking Authority, “Machine Learning for IRB Models: Follow-Up Report from the Consultation on the Discussion Paper on Machine Learning for IRB Models” (August 2023).

- [2] OECD, “Artificial intelligence, machine learning and big data in finance: Opportunities, challenges, and implications for policy makers” (2021).

- [3] Bank of England, “Machine learning in UK financial services” (2022).