Management of artificial intelligence risks

As the industry moves towards a technologically advanced future, the model risk management (MRM) community is grappling with the challenges posed by complex artificial intelligence models and their governance. Drawing on his experience with AI, Peter Plochan, Principal Risk Solutions Manager at SAS, outlines the key challenges of AI applications and offers advice on managing them.

Across industries, analysts expect a dramatic increase in the adoption of artificial intelligence (AI) technology over the next few years. In financial services specifically, the appeal of AI technology is strong and growing, outpacing that in many other industries.

But what about the challenges of AI technology? Artificial intelligence adds fuel to the fire already burning within banks’ modelling ecosystems. One reason is that it demands even more emphasis on core areas that already require significant attention, such as data quality and availability, model interpretability, validation, deployment and governance. These demands can make banks hesitant to move full speed ahead with their AI projects.

At the same time, some factions of the industry are calling for a cautious approach toward AI. Leading the pack are model risk management and model governance professionals responsible for protecting their banks from losses caused by use of improper or inaccurate models. Their concerns are underscored by the recognition that all AI models – not just those used for regulatory purposes – will eventually end up in the bank's model inventory, under their domain. And all those models will need to be governed.

-----

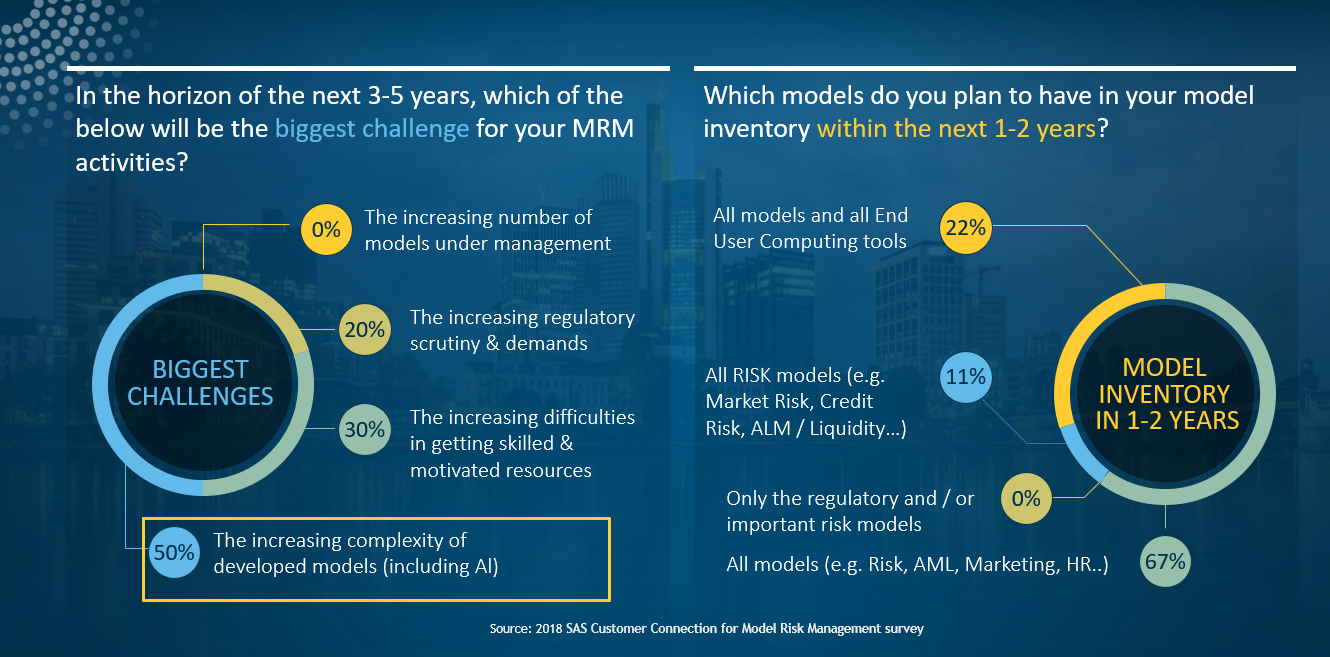

Figure 1: Perspectives on AI from MRM industry leaders | Source: SAS Model Risk Management Customer Connection event survey

According to our survey of MRM industry leaders attending the SAS MRM Customer Connection event (Figure 1):

- In the short term (1-2 years), they plan to bring all models used within the bank into their model inventory and model governance. This includes all AI models, irrespective of whether they are used in Risk, Finance, Marketing or anywhere else.

- In the long term (3-5 years), they see the increasing complexity of models (AI) as the biggest challenge for their MRM activities.

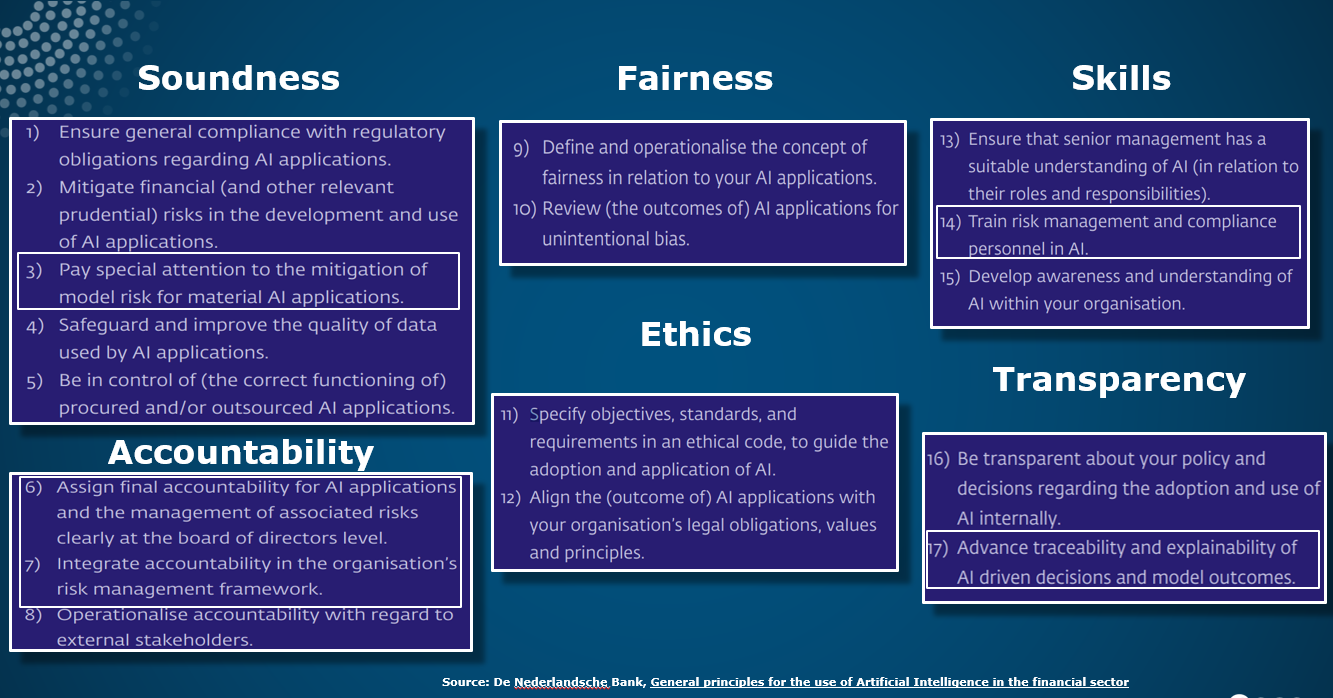

In parallel, banking regulators, who are also concerned about the risks that using AI models entails, are taking their first actions. For example, the Dutch Central Bank (Figure 2) and the Danish Financial Supervisory Authority recently issued general principles and recommendations for the use of AI in financial services.

-----

Figure 2 | Source: De Nederlandsche Bank

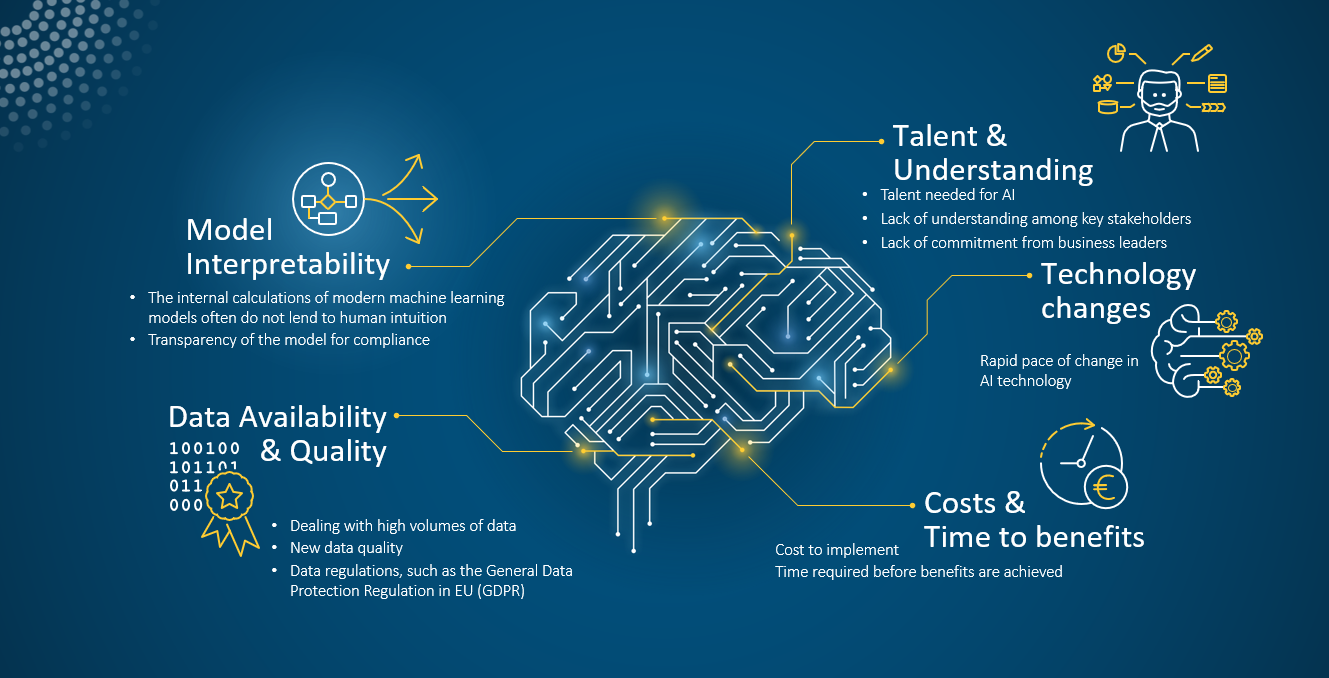

As the industry increasingly adopts AI, it becomes essential to know how to confront the challenges raised by this promising technology. With the right approaches, that may not be as complicated as you think. Let’s examine five of the most common stumbling blocks banks will need to address on the road to AI mastery (Figure 3), along with five overarching “fixes” that can keep those obstacles from halting AI progress and, ultimately, mitigate AI-related risks.

-----

Figure 3: Key challenges of AI adoption | Source: SAS and GARP, Artificial Intelligence in Banking and Risk Management survey

The challenge #1: Model interpretability.

The fix: Create explainable AI.

Neural networks and other machine learning (ML) models are complex, which makes them harder to understand and explain than traditional models. This opacity introduces additional risk and demands a higher level of governance.

To avoid fines and remain compliant, and also to minimise bad business decisions based on AI models, banks must be able to explain their models and the rationale behind them in depth, not only to regulators and auditors but also to internal stakeholders. Fortunately, there are several things you can do to make complex neural networks and other ML models easier to understand and explain:

- Introduce a visual interpretation of modelling logic to clearly illustrate all the model’s inputs, outputs and dependencies.

- Establish a central model governance and model management framework. This avoids the creation of multiple, parallel AI “islands” within the organisation, each doing its own thing.

- Use high-performance computing capabilities to automatically perform variable importance and sensitivity analysis when needed, and stress test the inputs to AI models.

- Perform frequent benchmarks, and regularly compare champion to challenger models.
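Variable importance, one of the analyses listed above, can be computed in a model-agnostic way by permutation: shuffle one input column, re-score the model, and measure how much the error grows. A simple sensitivity check, in the spirit of stress testing inputs, shifts one input by a fixed shock and records the average change in prediction. The Python sketch below is illustrative only. `toy_model` is a hypothetical stand-in for any fitted model, the data are simulated, and none of the function names come from a SAS product or any other library.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]
Model = Callable[[Row], float]


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict: Model, X: List[Row], y: List[float],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Average increase in MSE when one feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Same rows, with only column j permuted (breaks its link to the target).
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            permuted = mean_squared_error(y, [predict(row) for row in X_perm])
            increases.append(permuted - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def sensitivity(predict: Model, X: List[Row], j: int, shock: float) -> float:
    """Average change in prediction when feature j is shifted by `shock`."""
    deltas = [predict(row[:j] + [row[j] + shock] + row[j + 1:]) - predict(row) for row in X]
    return sum(deltas) / len(deltas)


def toy_model(row: Row) -> float:
    # Hypothetical fitted model: strong effect of x0, weak effect of x1, ignores x2.
    return 3.0 * row[0] + 0.5 * row[1]


data_rng = random.Random(42)
X = [[data_rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(200)]
y = [toy_model(row) for row in X]
importances = permutation_importance(toy_model, X, y)
print(importances)                        # x0 far above x1; x2 exactly 0.0
print(sensitivity(toy_model, X, 0, 1.0))  # close to 3.0, the model's slope in x0
```

Because the toy model ignores `x2`, shuffling that column leaves the error unchanged, which is exactly the signal an importance ranking should give. In practice the same loops would run against a holdout sample, and each feature's permutations are independent, so they parallelise naturally.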

When a data scientist leaves, all of the above will help you retain critical know-how and intellectual property (IP) about your complex models in-house.

The challenge #2: Data availability and quality.

The fix: Feed a steady supply of healthy data.

AI technology is data-hungry, and for good reason: AI and ML models can consume vast amounts of data and improve automatically through this experience, that is, by learning. The result is greater accuracy and predictive power over time. But, to no one’s surprise, the adage “garbage in, garbage out” still applies. How can you make sure you’re feeding your AI technology healthy data?

- Manage data well. If you provide AI technology with a steady supply of trusted, good-quality information, your models can make good recommendations. Getting it right entails setting a high bar for governance. That includes following best practices for data preparation and defining specific data quality measures, along with processes to enforce them. These practices should be an integral part of your end-to-end modelling landscape, so that your data is ready for use in advanced analytics and modelling.

- Take full advantage of the latest technology – such as automation and distributed data storage – and make sure you can support all the different formats and structures AI models need.
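The data quality measures described above can be made concrete as automated checks that run before data reaches a model. The following is a minimal sketch, assuming records arrive as Python dictionaries. The field names (`loan_id`, `income`, `ltv`), the valid ranges and the 5% missing-value threshold are hypothetical choices for illustration, not recommended values.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass
class QualityReport:
    missing_rate: Dict[str, float]  # share of records where the field is missing
    out_of_range: Dict[str, int]    # count of values outside the agreed valid range
    duplicate_ids: int              # extra records whose identifier was already seen

    def passes(self, max_missing: float = 0.05) -> bool:
        return (all(rate <= max_missing for rate in self.missing_rate.values())
                and all(n == 0 for n in self.out_of_range.values())
                and self.duplicate_ids == 0)


def check_quality(records: List[Dict[str, Any]], id_field: str,
                  valid_ranges: Dict[str, Tuple[float, float]]) -> QualityReport:
    n = len(records)
    # Completeness: fraction of records with a missing value per field.
    missing = {f: sum(r.get(f) is None for r in records) / n for f in valid_ranges}
    # Validity: present values that fall outside [lo, hi].
    out_of_range = {
        f: sum(r.get(f) is not None and not lo <= r[f] <= hi for r in records)
        for f, (lo, hi) in valid_ranges.items()
    }
    # Uniqueness: identifiers that appear more than once.
    ids = [r[id_field] for r in records]
    return QualityReport(missing, out_of_range, len(ids) - len(set(ids)))


loans = [  # hypothetical sample: one missing income, one implausible LTV, one duplicated ID
    {"loan_id": 1, "income": 52000.0, "ltv": 0.80},
    {"loan_id": 2, "income": None, "ltv": 0.65},
    {"loan_id": 3, "income": 61000.0, "ltv": 1.70},
    {"loan_id": 3, "income": 48000.0, "ltv": 0.90},
]
report = check_quality(loans, "loan_id", {"income": (0.0, 1e7), "ltv": (0.0, 1.5)})
print(report.passes())  # False
```

A failed check could block the data from being used for training or scoring, and the report itself could be stored alongside the model as evidence for model governance.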

The challenge #3: Cost and time to benefit.

The fix: Jump-start AI through focus.

Too often, teams leap into an AI project without thinking through the rationale or implications. The reality is that some problems can be solved with traditional business intelligence tools and won’t significantly benefit from adding artificial intelligence. Other times, the rationale for an AI project isn’t clear, so it lacks buy-in from executive-level champions. You can decrease the time and cost of getting benefits from your AI technology by taking the following approach:

- Always think through the business problem you want to solve before you choose your methodology or technology.

- Start small – but do so with an eye toward how you can scale the project. Then roll it out enterprise-wide when you’re ready.

- Reuse existing resources, both human and technical. Rely on your existing talent with business knowledge as much as possible, rather than always hiring costly external data scientists who often lack an understanding of your operations.

- Establish one way of working across the entire enterprise, both at the process and technology levels.

The challenge #4: Talent and understanding.

The fix: Humanise AI.

To succeed with AI, you’ll need the right people to design and run your projects. Key stakeholders and decision makers should be able to clearly express what you’re trying to accomplish with AI, and what effects each AI project will have on people inside and outside the business. But how do you attract and retain the right talent – people who understand your goals and can help “humanise” AI?

- Make it a priority to get the right resources and nurture them along the way. Attracting and keeping top-notch resources can be an ongoing struggle. It helps if you embrace concepts like citizen data scientists, open source, automation and standardisation. It’s also important to give your data scientists the tools they need and to support their preferred ways of working. In turn, they’re more likely to stay engaged with what they’re doing and focus on how they’re adding value.

- Promote transparency and continually build awareness about AI and model risk management. This helps build trust and makes it easier to rally support to successfully establish AI where it’s needed throughout the business.

The challenge #5: Continual technology changes.

The fix: Operationalise AI.

How do we move abstract concepts about AI off the drawing board and out of data scientists’ heads so they can be used in daily operations? Here, technology choice plays a crucial role. You’ll need an AI platform that can:

- Automate labour-intensive manual processes within your end-to-end modelling life cycle.

- Offer the level of performance needed to make use of the latest technologies, such as GPUs, containers, edge or cloud deployment, and the LIME (local interpretable model-agnostic explanations) and ICE (individual conditional expectation) model interpretation frameworks.

- Centralise model governance and apply it across all models (not just AI models) – as well as the entire modelling life cycle.

- Integrate with existing systems and be reusable for other purposes.
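ICE (individual conditional expectation), one of the interpretation techniques named above, traces each observation's prediction as one input is swept over a grid while the other inputs stay fixed; averaging the curves gives the partial dependence curve. The sketch below is model-agnostic and uses only the Python standard library. `hypothetical_model` is an illustrative function with a built-in interaction, not a real credit model, and the function names are not taken from any particular toolkit.

```python
from typing import Callable, List, Sequence

Row = List[float]


def ice_curves(predict: Callable[[Row], float], X: List[Row],
               feature: int, grid: Sequence[float]) -> List[List[float]]:
    """One curve per observation: its prediction as `feature` sweeps over `grid`."""
    curves = []
    for row in X:
        curve = []
        for value in grid:
            modified = list(row)  # copy so the original observation is untouched
            modified[feature] = value
            curve.append(predict(modified))
        curves.append(curve)
    return curves


def partial_dependence(curves: List[List[float]]) -> List[float]:
    """Point-wise average of the ICE curves (the partial dependence curve)."""
    return [sum(point) / len(curves) for point in zip(*curves)]


def hypothetical_model(row: Row) -> float:
    # Illustrative model with an interaction: the effect of x0 depends on x1.
    x0, x1 = row
    return x0 * (1.0 - x1)


X = [[1.0, 0.25], [2.0, 0.75]]
curves = ice_curves(hypothetical_model, X, feature=0, grid=[0.0, 1.0, 2.0])
print(curves)                      # [[0.0, 0.75, 1.5], [0.0, 0.25, 0.5]]
print(partial_dependence(curves))  # [0.0, 0.5, 1.0]
```

The two ICE curves have different slopes (0.75 and 0.25), revealing an interaction that the averaged partial dependence curve hides. This is one reason ICE plots are often examined alongside partial dependence rather than instead of it.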

What’s next?

The next wave of AI is coming, and it’s coming fast. As the number of AI models in development and deployment rises, model risk management teams will feel the crunch.

Whether the pressure to manage evolving model risks comes from internal or external stakeholders, institutions should start now to establish model risk management processes that are AI-ready. Then they can proceed with AI initiatives in an efficient, governed manner. As questions about AI models surface, institutions that have taken the time to evaluate and adopt “fixes” for their AI challenges will reap the benefits of their proactive approach.